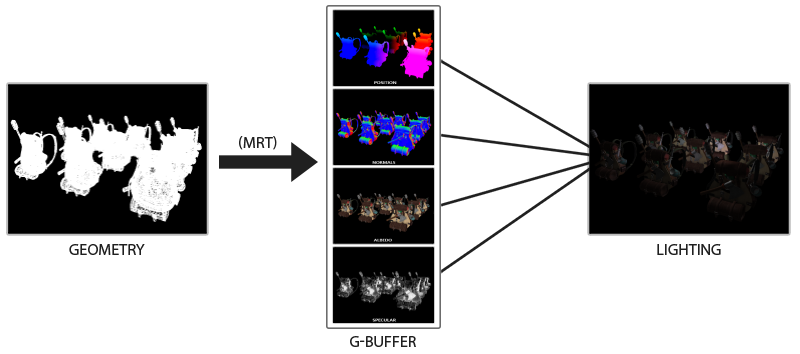

Deferred shading: techniques to avoid running time-consuming shaders on fragments that never appear in the final image.

Q: How is time saved?

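A: The first (geometry) pass only writes per-pixel attributes such as position, normal and material into off-screen buffers. In the final pass, the expensive lighting computation runs only for pixels visible in the final frame, instead of for every fragment that is later overwritten.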
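To make the saving concrete, here is a small C++ sketch (not the lecture's code) that counts how often the expensive lighting would run for fragments drawn in a given order. It assumes a less-than depth test, that forward shading lights every fragment that passes the depth test at the moment it is drawn, and made-up pixel indices and depths in the usage example.

```cpp
#include <cassert>
#include <limits>
#include <vector>

// One rasterized fragment: the pixel it covers and its depth (smaller = closer).
struct Fragment {
    int pixel;
    float depth;
};

// Number of expensive lighting evaluations under each strategy.
struct ShadeCounts {
    int forward;
    int deferred;
};

// Forward: a fragment is lit as soon as it passes the (less-than) depth test,
// even if a closer fragment overwrites it later.
// Deferred: the geometry pass only keeps the closest fragment per pixel, and the
// lighting pass then lights each covered pixel exactly once.
ShadeCounts countShading(const std::vector<Fragment>& frags, int numPixels) {
    assert(numPixels > 0);
    const float inf = std::numeric_limits<float>::infinity();
    std::vector<float> zbuf(numPixels, inf);  // cleared depth buffer
    ShadeCounts counts{0, 0};

    for (const Fragment& f : frags) {
        assert(f.pixel >= 0 && f.pixel < numPixels);
        if (f.depth < zbuf[f.pixel]) {
            zbuf[f.pixel] = f.depth;
            ++counts.forward;  // forward shading lights this fragment now
        }
    }
    for (float z : zbuf) {
        if (z < inf) ++counts.deferred;  // one lighting-pass evaluation per visible pixel
    }
    return counts;
}
```

With three fragments drawn back to front onto pixel 0 (depths 0.9, 0.5, 0.1) plus one fragment on pixel 1, forward shading lights 4 fragments while deferred shading lights only 2 pixels. Drawing the same fragments front to back closes the gap in this example, because the farther fragments then fail the depth test.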

Advantage:

Disadvantage:

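Note: the GLSL `flat` qualifier indicates that a variable passed from the vertex shader through the rasterizer should not be interpolated; every fragment of the primitive receives the value of a single (provoking) vertex.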
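A tiny C++ sketch of what `flat` changes. It assumes OpenGL's default last-vertex provoking convention and ignores perspective correction (real smooth interpolation is perspective-correct): a smooth varying blends the three vertex values with the fragment's barycentric weights, while a flat varying copies one vertex's value.

```cpp
#include <array>
#include <cassert>

using Tri = std::array<float, 3>;  // one value per triangle vertex, or barycentric weights

// Default (smooth) varying: blend the three vertex values with the fragment's
// barycentric weights. Perspective correction is ignored to keep the sketch short.
float smoothVarying(const Tri& vertexValues, const Tri& bary) {
    assert(bary[0] + bary[1] + bary[2] > 0.99f && bary[0] + bary[1] + bary[2] < 1.01f);
    return bary[0] * vertexValues[0] + bary[1] * vertexValues[1] + bary[2] * vertexValues[2];
}

// flat varying: no interpolation, every fragment receives the provoking vertex's value.
// Assumes OpenGL's default last-vertex provoking convention.
float flatVarying(const Tri& vertexValues, const Tri& /*bary*/) {
    return vertexValues[2];
}
```

At the triangle's centroid, vertex values 0, 3 and 6 interpolate to 3 with a smooth varying, but a flat varying yields 6 everywhere. This is why per-primitive data such as integer material IDs are declared `flat` (GLSL requires integer fragment-shader inputs to be flat).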

Some data and the space required:

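Q: Why can the vertex normal be stored at lower precision than the world-space vertex position?

A: Normals are unit vectors with components in [-1, 1], so small quantization errors only slightly perturb the lighting. Reducing the precision of world-space positions, however, causes jagged-edge artifacts: there are fewer distinct depth values, so nearby fragments collapse onto the same value and the image looks blocky. It also worsens z-fighting, because the z-values of nearby surfaces are more likely to collide.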

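Z-fighting occurs when two fragments have identical z-buffer values $\in [0, 1]$: the depth test cannot tell which one is in front, so the competing fragments are discarded seemingly at random and the surfaces flicker or interleave.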
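The following C++ sketch quantizes the depths of two surfaces 0.01 units apart into an integer depth buffer, using the same linear and non-linear depth mappings the notes define. The values near = 0.1, far = 100, the surface depths and round-to-nearest storage are illustrative assumptions; real depth formats and hardware rounding vary.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Linear mapping of eye-space distance z in [nearZ, farZ] to [0, 1].
double depthLinear(double z, double nearZ, double farZ) {
    assert(farZ > nearZ);
    return (z - nearZ) / (farZ - nearZ);
}

// Non-linear (1/z) mapping of z in [nearZ, farZ] to [0, 1].
double depthNonLinear(double z, double nearZ, double farZ) {
    assert(farZ > nearZ && nearZ > 0.0);
    return (1.0 / nearZ - 1.0 / z) / (1.0 / nearZ - 1.0 / farZ);
}

// Stores a [0, 1] depth in a fixed-point buffer with `bits` bits (round to nearest).
std::uint32_t quantizeDepth(double d, int bits) {
    assert(bits > 0 && bits < 32);
    const double maxVal = static_cast<double>((1u << bits) - 1u);
    return static_cast<std::uint32_t>(std::lround(d * maxVal));
}

// Two surfaces z-fight when they land on the same depth-buffer value.
bool zFights(double z1, double z2, double nearZ, double farZ, int bits, bool nonLinear) {
    double (*map)(double, double, double) = nonLinear ? depthNonLinear : depthLinear;
    return quantizeDepth(map(z1, nearZ, farZ), bits) ==
           quantizeDepth(map(z2, nearZ, farZ), bits);
}
```

With surfaces at z = 50 and z = 50.01, the non-linear mapping puts both on the same value in a 16-bit buffer (z-fighting) but keeps them apart with 24 bits, while the linear mapping separates them even at 16 bits. Close to the camera the trade-off reverses: there the non-linear mapping has far more resolution.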

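Example of linear depth buffering:

\[z_\text{depth} = \frac{z - \text{near}}{\text{far} - \text{near}}\]

Non-linear depth buffering:

\[z_\text{depth} = \frac{1/\text{near} - 1/z}{1/\text{near} - 1/\text{far}}\]

Both map $z = \text{near}$ to 0 and $z = \text{far}$ to 1; the non-linear form spends more of the $[0, 1]$ range close to the camera.

The ambient term in the Phong lighting model is a constant, so surfaces that receive no direct light look flat and unrealistic.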

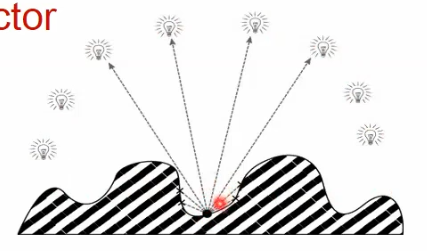

Ambient occlusion (AO) simulates the illumination of diffuse surfaces by a uniform area light source covering the hemisphere above each point.

For each point, determine from what proportion of the directions in the hemisphere around it the point is visible (i.e. not blocked by nearby geometry). This proportion is the accessibility factor, which scales the ambient lighting term.
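To illustrate the accessibility factor, this C++ sketch (an illustration, not the lecture's ray tracer) estimates it for a point at the origin on a floor with normal +z, next to a hypothetical wall in the plane x = 1 whose height is `wallHeight`. Directions are stratified so that each sample covers equal solid angle; some AO formulations weight rays by cos θ instead.

```cpp
#include <cassert>
#include <cmath>

// Fraction of hemisphere directions (around the normal +z) from the origin that are
// NOT blocked by a hypothetical wall in the plane x = 1 spanning heights [0, wallHeight].
// Stratified sampling: cos(theta) uniform in [0, 1], phi uniform in [0, 2*pi),
// so every sample represents the same solid angle.
double accessibility(double wallHeight, int thetaSteps, int phiSteps) {
    assert(thetaSteps > 0 && phiSteps > 0);
    const double kPi = 3.14159265358979323846;
    int unoccluded = 0;
    for (int i = 0; i < thetaSteps; ++i) {
        const double dz = (i + 0.5) / thetaSteps;   // cos(theta)
        const double r = std::sqrt(1.0 - dz * dz);  // sin(theta)
        for (int j = 0; j < phiSteps; ++j) {
            const double phi = 2.0 * kPi * (j + 0.5) / phiSteps;
            const double dx = r * std::cos(phi);
            // The ray t * (dx, dy, dz) reaches x = 1 at t = 1 / dx, at height dz / dx.
            const bool hitsWall = dx > 0.0 && dz / dx < wallHeight;
            if (!hitsWall) ++unoccluded;
        }
    }
    return static_cast<double>(unoccluded) / (thetaSteps * phiSteps);
}
```

With no wall (`wallHeight = 0`) every ray escapes and the accessibility is 1; with a very tall wall exactly half of the sampled hemisphere is blocked, giving 0.5.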

In practice, this computation can be done once at every vertex and passed to the vertex shader as an attribute:

```glsl
// Accessibility factor computed by ray tracing from each vertex and testing for occlusion.
// This does NOT work for dynamic scenes.
layout (location = 2) in float Accessibility;

...

v2fColor = Accessibility * MatlAmbient + LightDiffuse * MatlDiffuse * N_dot_L;
```
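A C++ sketch of the per-vertex line above, evaluated per color channel. The `RGB` struct and the clamp of `N_dot_L` to zero are assumptions, since the excerpt does not show how `N_dot_L` is computed.

```cpp
#include <algorithm>
#include <cassert>

struct RGB {
    float r, g, b;
};

// Per-vertex AO shading, mirroring
//   v2fColor = Accessibility * MatlAmbient + LightDiffuse * MatlDiffuse * N_dot_L;
// nDotL is clamped at 0 so light from behind adds nothing (an assumption).
RGB aoVertexColor(float accessibility, RGB matlAmbient, RGB lightDiffuse,
                  RGB matlDiffuse, float nDotL) {
    assert(accessibility >= 0.0f && accessibility <= 1.0f);
    const float d = std::max(nDotL, 0.0f);
    return {accessibility * matlAmbient.r + lightDiffuse.r * matlDiffuse.r * d,
            accessibility * matlAmbient.g + lightDiffuse.g * matlDiffuse.g * d,
            accessibility * matlAmbient.b + lightDiffuse.b * matlDiffuse.b * d};
}
```

Only the ambient term is scaled by accessibility: a half-occluded vertex (0.5) keeps half of its ambient color, while the direct diffuse term is unchanged.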

Limitations of pre-computed AO:

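We can make better use of the GPU by approximating accessibility per pixel in screen space (screen-space ambient occlusion, SSAO), using the per-pixel normal and depth buffers. Because it is recomputed every frame, this also works for dynamic scenes.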
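The screen-space shader relies on the application to upload `random_dir`. A minimal C++ sketch of that app-side step, assuming the directions should be uniform over the whole sphere (the shader flips them into the normal's hemisphere); the LCG and its constants are only a self-contained stand-in for whatever random number generator the application uses.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

struct Vec3 {
    float x, y, z;
};

float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Minimal 64-bit LCG returning doubles in [0, 1); a stand-in for the app's RNG.
class Lcg {
public:
    explicit Lcg(std::uint64_t seed) : state_(seed) {}
    double next() {
        state_ = state_ * 6364136223846793005ULL + 1442695040888963407ULL;
        return static_cast<double>(state_ >> 11) * (1.0 / 9007199254740992.0);  // / 2^53
    }

private:
    std::uint64_t state_;
};

// `count` unit vectors uniformly distributed over the sphere
// (z uniform in [-1, 1], azimuth uniform in [0, 2*pi)), e.g. to upload as random_dir.
std::vector<Vec3> makeRandomDirs(int count, std::uint64_t seed) {
    assert(count > 0);
    const double kPi = 3.14159265358979323846;
    Lcg rng(seed);
    std::vector<Vec3> dirs;
    dirs.reserve(count);
    for (int i = 0; i < count; ++i) {
        const double z = 2.0 * rng.next() - 1.0;
        const double phi = 2.0 * kPi * rng.next();
        const double r = std::sqrt(1.0 - z * z);
        dirs.push_back({static_cast<float>(r * std::cos(phi)),
                        static_cast<float>(r * std::sin(phi)),
                        static_cast<float>(z)});
    }
    return dirs;
}

// Same flip as the shader's `if (dot(N, dir) < 0.0) dir = -dir;`.
Vec3 toHemisphere(Vec3 d, Vec3 n) {
    if (dot(n, d) < 0.0f) return {-d.x, -d.y, -d.z};
    return d;
}
```

After `toHemisphere`, every generated direction lies on the normal's side of the surface, which is what the hemispherical ray march needs.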

Accessibility is estimated as the proportion of unoccluded rays:

```glsl
// numRays and numSteps (uint), stepSize (float), random_dir, sNormalEyeZ, sColor,
// object_level and ssao_level are assumed to be uniforms declared elsewhere.
vec4 NZ = texelFetch(sNormalEyeZ, ivec2(gl_FragCoord.xy), 0);
vec3 N = NZ.xyz;
vec3 P = vec3(gl_FragCoord.xy, NZ.w);  // w holds the eye-space z-coordinate

uint occ = 0u;
for (uint i = 0u; i < numRays; i++) {
    // uniform random_dir passed in from app
    vec3 dir = random_dir[i].xyz;
    // make hemispherical: flip directions that point into the surface
    if (dot(N, dir) < 0.0) dir = -dir;

    // March along the ray
    for (uint j = 0u; j < numSteps; j++) {
        // march one more step from P
        vec3 Q = P + dir * float(j + 1u) * stepSize;
        float thatEyeZ = texelFetch(sNormalEyeZ, ivec2(Q.xy), 0).w;
        // if Q is deeper than the stored eye-space z at its pixel, it is occluded
        if (Q.z < thatEyeZ) {
            occ += 1u;
            // stop marching this way
            break;
        }
    }
}

float ao_amt = float(numRays - occ) / float(numRays);

vec4 object_color = texelFetch(sColor, ivec2(gl_FragCoord.xy), 0);

// mix(a, b, t) = (1 - t) * a + t * b, i.e. (1 - ssao_level) * vec4(0.2) + ssao_level * ao_amt
FragColor = object_level * object_color + mix(vec4(0.2), vec4(ao_amt), ssao_level);
```
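To check the logic of the shader above without a GPU, here is a C++ sketch that runs the same ray-marching loop on a tiny hypothetical G-buffer. The scene (`makeStepScene`), the four fixed directions (`axisDirs`) and the clamp-to-edge lookup are illustrative assumptions, not part of the lecture; the application would normally upload random directions instead.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 {
    float x, y, z;
};

// Hypothetical G-buffer: per pixel an eye-space normal and eye-space z (camera looks
// down -z, so larger z = closer to the camera), like the xyz/w channels of sNormalEyeZ.
struct GBuffer {
    int width, height;
    std::vector<Vec3> normal;
    std::vector<float> eyeZ;
    // Clamp-to-edge lookup (texelFetch outside the texture is undefined; clamping is our choice).
    int index(int x, int y) const {
        x = std::max(0, std::min(x, width - 1));
        y = std::max(0, std::min(y, height - 1));
        return y * width + x;
    }
};

// CPU mirror of the SSAO loop for pixel (px, py): fraction of unoccluded rays.
float ssaoAt(const GBuffer& g, int px, int py, const std::vector<Vec3>& dirs,
             int numSteps, float stepSize) {
    assert(!dirs.empty());
    const int i0 = g.index(px, py);
    const Vec3 n = g.normal[i0];
    const Vec3 p{px + 0.5f, py + 0.5f, g.eyeZ[i0]};  // pixel centre, like gl_FragCoord.xy
    int occ = 0;
    for (Vec3 d : dirs) {
        if (n.x * d.x + n.y * d.y + n.z * d.z < 0.0f) d = {-d.x, -d.y, -d.z};  // hemisphere
        for (int j = 0; j < numSteps; ++j) {
            const float t = (j + 1) * stepSize;
            const Vec3 q{p.x + d.x * t, p.y + d.y * t, p.z + d.z * t};
            const float thatEyeZ = g.eyeZ[g.index(static_cast<int>(std::floor(q.x)),
                                                  static_cast<int>(std::floor(q.y)))];
            if (q.z < thatEyeZ) {  // q lies behind the stored surface: occluded
                ++occ;
                break;
            }
        }
    }
    return static_cast<float>(static_cast<int>(dirs.size()) - occ) /
           static_cast<float>(dirs.size());
}

// Hypothetical 8x8 scene: floor at eye z = -10 for x < 4, top of a raised block at
// eye z = -5 for x >= 4; every normal faces the camera.
GBuffer makeStepScene() {
    GBuffer g{8, 8, std::vector<Vec3>(64, Vec3{0.0f, 0.0f, 1.0f}), std::vector<float>(64)};
    for (int y = 0; y < g.height; ++y)
        for (int x = 0; x < g.width; ++x)
            g.eyeZ[y * g.width + x] = (x < 4) ? -10.0f : -5.0f;
    return g;
}

// Four fixed unit directions along +-x and +-y, tilted toward the camera.
std::vector<Vec3> axisDirs() {
    const float s = 1.0f / std::sqrt(1.25f);  // normalizes (1, 0, 0.5)
    return {{s, 0.0f, 0.5f * s}, {-s, 0.0f, 0.5f * s}, {0.0f, s, 0.5f * s}, {0.0f, -s, 0.5f * s}};
}
```

With two steps of one pixel each, the floor pixel at (2, 4) loses the ray that runs into the raised block (accessibility 0.75), while the floor pixel at (0, 4), too far away to reach the block, and the pixel (5, 4) on top of the block keep 1.0.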